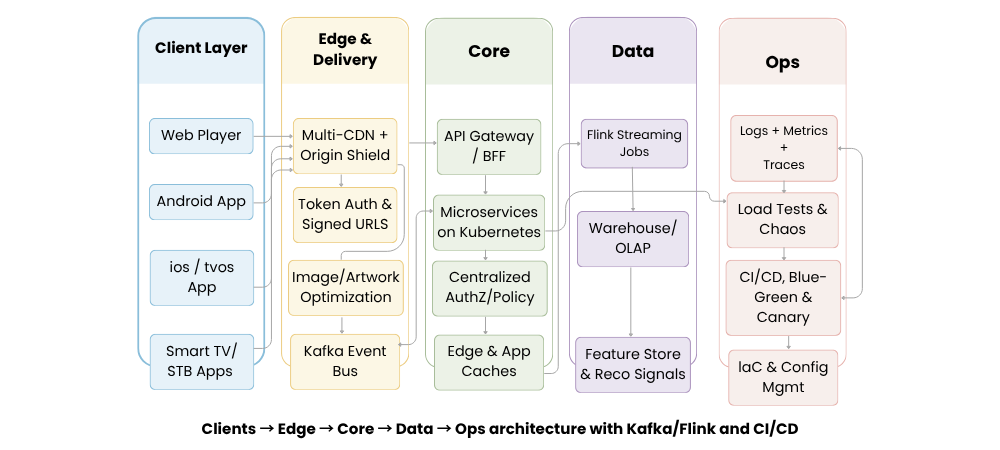

This article maps the Hotstar Tech Stack in detail: the end‑to‑end OTT architecture – client players (web/Android/iOS/TV) using ABR HLS/DASH with multi‑DRM; multi‑CDN delivery; an API gateway in front of Kubernetes microservices; Kafka + Flink streaming into an OLAP warehouse and feature store; plus the observability, CI/CD, cost, and security practices that keep streams stable at massive scale. You’ll learn which components matter, how they fit together, trade‑offs to watch, and a concise path to build a similar OTT platform with VdoCipher.

TL;DR

- Clients: Web, Android, iOS/tvOS, and TV apps with ABR streaming and multi‑DRM (Widevine/FairPlay/PlayReady).

- Edge & Delivery: Multi‑CDN with origin shield, token auth & signed URLs, DPR‑aware image optimization.

- Core Services: API Gateway/BFF in front of microservices on Kubernetes with a service mesh and layered caching.

- Data Platform: Kafka event bus → Flink streaming → OLAP warehouse → feature store powering recommendations.

- Ops & Release: Deep observability, pre‑event load/chaos tests, CI/CD with canary + blue‑green, all managed via IaC.

Table of Contents:

- What “Hotstar Tech Stack” means (scope and scale)

- Client layer: players, ABR, DRM, downloads

- Edge and delivery: multi‑CDN done right

- Core: API gateway/BFF and microservices on Kubernetes

- Data: Kafka + Flink with OLAP and a feature store

- Observability, CI/CD, and reliability

- Security and cost: what keeps it sustainable

- Component index (Hotstar Tech Stack at a glance)

- How to build a Hotstar-like platform with VdoCipher

- FAQ

What “Hotstar Tech Stack” means (scope and scale)

You want the parts list and the wiring, not a press release. Hotstar lives on live‑sports spikes and everyday VOD across flaky networks and cheap devices. The stack is built to survive that: ABR clients with multi‑DRM, a multi‑CDN edge with real auth at the edge, microservices on Kubernetes behind a BFF, Kafka → Flink for real‑time signals, OLAP + a feature store for decisions, and Ops that actually gate rollouts.

Client layer: players, ABR, DRM, downloads

Reality: huge device spread and inconsistent bandwidth.

- Players: Web = MSE; Android = ExoPlayer; iOS/tvOS = AVPlayer; TVs = native SDKs.

- ABR: Keep ladders sane. Bias fast start, then climb. Kill stalls, not bitrates.

- Codecs: AVC/H.264 for reach; HEVC/H.265 when decode + licensing allow; selective AV1 where it’s worth it.

- DRM: Widevine / FairPlay / PlayReady. Online + offline licenses, device caps, expiry.

- Downloads: Background jobs locked to offline DRM policy and storage/network rules.
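The "bias fast start, then climb" rule can be sketched as a small selection function. This is a minimal illustration, not Hotstar's or any player's actual algorithm: the ladder values, the safety factors, the startup cap, and the `pick_rendition` name are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int
    bitrate_kbps: int


# Hypothetical ladder, lowest to highest; real ladders are tuned per title and codec.
LADDER = [
    Rendition(240, 400),
    Rendition(360, 800),
    Rendition(480, 1400),
    Rendition(720, 2800),
    Rendition(1080, 5000),
]

STARTUP_MAX_INDEX = 1   # assumed cap: never start above the second rung
LOW_BUFFER_S = 8.0      # assumed threshold below which we get conservative


def pick_rendition(throughput_kbps: float, buffer_s: float, started: bool) -> Rendition:
    """Return the highest rendition whose bitrate fits a discounted throughput budget."""
    if not started:
        # Fast start: only low rungs are eligible and the budget is heavily discounted.
        candidates, safety = LADDER[: STARTUP_MAX_INDEX + 1], 0.5
    elif buffer_s < LOW_BUFFER_S:
        # Buffer is thin: protect against a stall before chasing bitrate.
        candidates, safety = LADDER, 0.6
    else:
        # Healthy buffer: climb toward the measured throughput.
        candidates, safety = LADDER, 0.85

    budget = throughput_kbps * safety
    best = candidates[0]  # always fall back to the lowest rung
    for rendition in candidates:
        if rendition.bitrate_kbps <= budget:
            best = rendition
    return best
```

On a 10 Mbps connection this starts at 360p and only moves to 1080p once playback has begun and the buffer is healthy, which trades a few seconds of lower quality for a faster first frame.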

Edge and delivery: multi‑CDN done right

For the Hotstar Tech Stack, the edge is where scale and cost are won.

- Multi‑CDN + origin shield: Steer by RTT/availability; shields protect packagers/origins from spikes.

- Token auth & signed URLs: Short‑TTL tokens enforce entitlement at the CDN edge.

- Cache keys & TTLs: Keys encode content ID, rendition, codec, audio/subtitle, device class; targeted invalidation keeps hit‑ratio high.

- Image optimization: WebP/AVIF, DPR‑aware sizes, and variant caching reduce page weight.
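To make the short‑TTL token idea concrete, here is a minimal sketch of HMAC‑signed URLs. It is an assumption-laden illustration: the query parameter names (`uid`, `exp`, `sig`), the shared secret, and the `sign_url`/`verify_url` helpers are hypothetical, and each real CDN defines its own token format.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"demo-shared-secret"  # hypothetical key shared by the signer and the edge


def _signature(path: str, user_id: str, expires: int) -> str:
    message = f"{path}|{user_id}|{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def sign_url(path: str, user_id: str, ttl_s: int = 60, now: Optional[float] = None) -> str:
    """Issue a URL that is valid for ttl_s seconds and bound to one path and user."""
    issued = time.time() if now is None else now
    expires = int(issued + ttl_s)
    query = urlencode({"uid": user_id, "exp": expires, "sig": _signature(path, user_id, expires)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Edge-side check: reject expired, malformed, or tampered URLs."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query)
    try:
        user_id = query["uid"][0]
        expires = int(query["exp"][0])
        provided = query["sig"][0]
    except (KeyError, ValueError):
        return False
    current = time.time() if now is None else now
    if current > expires:
        return False
    expected = _signature(parsed.path, user_id, expires)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), provided.encode())
```

Because the path is part of the signed message, a token issued for one rendition cannot be reused for another, and the short TTL limits how long a leaked URL stays useful.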


Core: API gateway/BFF and microservices on Kubernetes

Catalog, playback, search, payments – all need to stay up under load.

- API Gateway/BFF: Device‑tuned payloads, coarse rate limits, request shaping. Cut round trips, especially on low‑end phones.

- Kubernetes + mesh: Autoscale, PDBs, quotas. Mesh gives mTLS, retries, timeouts, breakers.

- Identity & policy: Tokens everywhere; centralized AuthZ/OPA‑style rules so teams don’t hand‑roll auth.

- Caching: CDN → BFF cache → Redis near services → tiny in‑proc caches for the hottest keys.

- Languages: Use what fits: Java/Kotlin, Go, Node for BFF, Python around data/ML.
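The layered caching path can be sketched as a read‑through lookup across two tiers. This is a simplified model under stated assumptions: the `TTLCache` and `LayeredCache` classes are hypothetical, and the "shared" tier is an in‑memory stand‑in for Redis rather than a real Redis client.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-memory cache with per-entry expiry."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self._ttl_s = ttl_s
        self._clock = clock
        self._data: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # lazily evict expired entries
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._data[key] = (self._clock() + self._ttl_s, value)


class LayeredCache:
    """Check the in-process tier, then the shared tier, then the backing service."""

    def __init__(self, local: TTLCache, shared: TTLCache, load: Callable[[str], str]):
        self._local = local    # very short TTL, hottest keys only
        self._shared = shared  # stand-in for Redis near the services
        self._load = load      # the expensive call being protected

    def get(self, key: str) -> str:
        value = self._local.get(key)
        if value is not None:
            return value
        value = self._shared.get(key)
        if value is None:
            value = self._load(key)
            self._shared.set(key, value)
        self._local.set(key, value)  # repopulate the faster tier on the way out
        return value
```

Keeping the in‑process TTL much shorter than the shared TTL bounds staleness per pod while still absorbing bursts on the hottest keys.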

Data: Kafka + Flink with OLAP and a feature store

Streaming is the heartbeat.

- Kafka bus: Playback/QoE beacons, catalog, ads, experiments, ops.

- Flink jobs: Popularity, enrichments, counters, near‑real‑time materialized views.

- Serving stores: Wide‑column (write‑heavy session data), Redis (low‑latency keys), search clusters (discovery/logs), object storage (media/ML).

- Analytics & OLAP: Warehouse + lake for BI/experiments; event‑time partitions; handle late data.

- Feature store: Online KV + offline warehouse with one schema to keep ranking honest.
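Event‑time windows and late data are easier to reason about with a toy model. The sketch below is plain Python that imitates the concept of a tumbling window with a watermark and allowed lateness; it does not use the Flink API, and the `TumblingCounter` class, window size, and lateness values are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class TumblingCounter:
    """Count plays per content ID in fixed event-time windows."""

    def __init__(self, window_s: int = 60, allowed_lateness_s: int = 30):
        self.window_s = window_s
        self.allowed_lateness_s = allowed_lateness_s
        self.watermark = 0  # simplification: the highest event time seen so far
        self.dropped = 0    # events that arrived after their window was finalized
        self._open: Dict[Tuple[int, str], int] = defaultdict(int)

    def _deadline(self, window_start: int) -> int:
        return window_start + self.window_s + self.allowed_lateness_s

    def add(self, event_time_s: int, content_id: str) -> List[Tuple[int, str, int]]:
        """Ingest one event; return any (window_start, content_id, count) now final."""
        window_start = event_time_s - event_time_s % self.window_s
        if self._deadline(window_start) <= self.watermark:
            self.dropped += 1  # too late: the window has already been emitted
            return []
        self._open[(window_start, content_id)] += 1
        self.watermark = max(self.watermark, event_time_s)

        closed = []
        for start, cid in sorted(self._open):
            if self._deadline(start) <= self.watermark:
                closed.append((start, cid, self._open.pop((start, cid))))
        return closed
```

An event that arrives out of order but within the lateness allowance still lands in its original window, which is why counts are keyed by event time rather than arrival time.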

Observability, CI/CD, and reliability

Release safety and QoE guardrails are first‑class.

- Golden signals: Latency, errors, throughput, saturation; QoE: startup time, rebuffer ratio, avg bitrate, fatal errors.

- Tracing & logs: Distributed traces, structured logs, PII scrubbing, hot/cold retention.

- Pre‑event drills: Load + chaos for CDN failover, origin throttling, mesh stress; predictive capacity pre‑warm.

- CI/CD & IaC: Canary daily; blue‑green for critical events; everything declarative and signed.
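A canary gate driven by QoE can be sketched as a comparison between baseline and canary cohorts. Everything here is an assumption for illustration: the `QoE` fields, the rebuffer‑ratio definition (stall time over stall plus watch time), the 10% tolerances, and the minimum sample size are not taken from any real rollout system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QoE:
    sessions: int
    watch_s: float          # seconds of actual playback
    rebuffer_s: float       # seconds spent stalled
    startup_failures: int   # sessions that never reached first frame

    @property
    def rebuffer_ratio(self) -> float:
        total = self.watch_s + self.rebuffer_s
        return self.rebuffer_s / total if total else 0.0

    @property
    def failure_rate(self) -> float:
        return self.startup_failures / self.sessions if self.sessions else 0.0


def canary_verdict(baseline: QoE, canary: QoE, min_sessions: int = 1000,
                   tolerance: float = 0.10) -> str:
    """Return 'wait', 'rollback', or 'promote' for a canary cohort."""
    if canary.sessions < min_sessions:
        return "wait"  # not enough traffic to judge either way
    if canary.rebuffer_ratio > baseline.rebuffer_ratio * (1 + tolerance):
        return "rollback"
    if canary.failure_rate > baseline.failure_rate * (1 + tolerance):
        return "rollback"
    return "promote"
```

Gating on viewer‑facing metrics rather than only on service errors catches regressions that return HTTP 200 but still degrade playback.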

Security and cost: what keeps it sustainable

- Content protection: Multi‑DRM, tokenized media URLs, domain/geo restriction, dynamic watermarking, concurrency limits.

- Secrets & identity: Vault, short‑TTL tokens, workload identity, default mTLS.

- Cost control: Push to CDN, scale predictively for match windows, right‑size pods, tiered + compacted streams.
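Concurrency limits are commonly enforced with heartbeats from active players. The following is a minimal in‑memory sketch under assumptions: the `ConcurrencyLimiter` class, the two‑stream cap, and the 30‑second heartbeat timeout are hypothetical, and a production system would keep this state in a shared store rather than process memory.

```python
import time
from typing import Callable, Dict


class ConcurrencyLimiter:
    """Allow at most max_streams simultaneously active devices per account."""

    def __init__(self, max_streams: int = 2, heartbeat_ttl_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._max_streams = max_streams
        self._ttl_s = heartbeat_ttl_s
        self._clock = clock
        self._sessions: Dict[str, Dict[str, float]] = {}  # account -> device -> last seen

    def heartbeat(self, account_id: str, device_id: str) -> bool:
        """Record a heartbeat; return False if the device must stop playback."""
        now = self._clock()
        active = self._sessions.setdefault(account_id, {})
        # Devices that stopped sending heartbeats free their slot.
        for stale in [d for d, seen in active.items() if now - seen > self._ttl_s]:
            del active[stale]
        if device_id in active or len(active) < self._max_streams:
            active[device_id] = now
            return True
        return False
```

Expiring silent devices automatically means a crashed app does not lock a viewer out of their own account for longer than the heartbeat timeout.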

Component index (Hotstar Tech Stack at a glance)

This table summarizes the Hotstar Tech Stack in one place – layers, core tech, and what each piece does. It’s the setup that lets Hotstar stream reliably to millions at once.

| Layer | Core tech | What it does | Ops notes |
|---|---|---|---|
| Clients | Web (MSE), Android (ExoPlayer), iOS/tvOS (AVPlayer), TV apps | Playback UI with ABR + multi-DRM | Offline DRM downloads where enabled |
| Edge | Multi-CDN, origin shield, token auth, signed URLs, image optimizer | Low latency + scale at the edge | Cache keys: content/rendition/codec/tracks/device |
| Core | API Gateway/BFF, microservices on Kubernetes, service mesh | Business logic and aggregation | Rate limits, retries, breakers, back-pressure |
| Caching | CDN, Redis, in-proc | Latency + cost control | Layer caches per path and TTL |
| Events | Kafka | Unified event backbone | Playback, QoE, ads, catalog, experiments |
| Streaming | Flink | Real-time transforms | Popularity, counters, enrichments |
| Storage | Wide-column DB, Redis, search clusters, object storage | Serving + analytics | Write-heavy + low-latency paths |
| Analytics | Warehouse/OLAP + data lake | BI + experiments | Event-time partitions, late data |
| ML | Feature store | Online/offline features | Recs, ranking, search |
| Observability | Logs, metrics, traces | SLOs + incident response | Pre-event drills, tracing |
| CI/CD & IaC | Canary, blue-green, infrastructure as code | Safe rollouts | Auto rollback on QoE regressions |

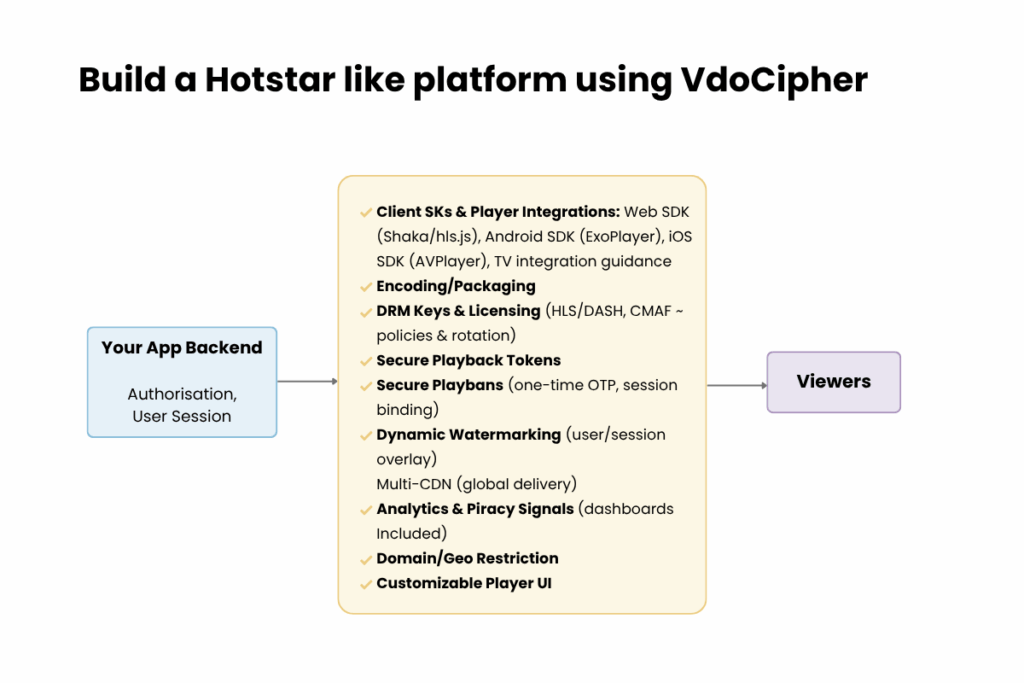

How to build a Hotstar-like platform with VdoCipher

You can build a Hotstar-like OTT platform with DRM encryption and video security – blocking illegal downloads and screen recording – using VdoCipher. VdoCipher is a secure video hosting service used by many OTT platforms; it bundles multi‑DRM, hardened playback, packaging, delivery, SDKs, and analytics so your team can ship fast without running packagers or a CDN.

What VdoCipher provides

- Multi‑DRM & key management – Widevine + FairPlay license services, key generation/rotation, policy controls (offline, expiry, output rules). No separate DRM vendor wrangling.

- Secure playback & access control – One‑time tokenized playback, domain/geo restriction, session/concurrency caps, dynamic visible watermarking to deter screen capture.

- Packaging & delivery – Managed storage, transcoding, and packaging, with multi-CDN delivery.

- Client SDKs & players – Web SDK (Shaka/hls), Android SDK (ExoPlayer), iOS SDK (AVPlayer), plus TV integration guidance so you’re not hand‑rolling players.

- Analytics – QoE dashboards and piracy signals you can forward into your own metrics pipeline for SLOs.

What you still own

- Identity & entitlements (login, plans, trials, who can watch what).

- Catalog & search (metadata, multilingual, SEO pages, sitemaps).

- Payments/subscriptions (regional taxes/rights, refunds, proration).

- App UX across web/mobile/TV, including offline policy.

- Observability & QoE (dashboards, alerts, incident playbooks).

- Experimentation (A/B, recommendations, ranking, placements).


FAQ

What streaming formats does the Hotstar Tech Stack use?

HLS and MPEG‑DASH with ABR. Web uses MSE; mobile/TV use native players.

Which video codecs are typical for the Hotstar Tech Stack?

AVC/H.264 for maximum reach; HEVC/H.265 where supported; selective AV1 where decode and cost make sense.

How are recommendations and search implemented in a Hotstar‑style stack?

A feature‑store pattern blending content, behavior, context; Flink adds real‑time popularity; search uses typed schemas and autosuggest.

How is live‑event scale handled in the Hotstar Tech Stack?

Predictive pre‑warm, multi‑CDN steering, canary/blue‑green rollouts, and chaos/load drills ahead of matches.

How does DRM work across devices in the Hotstar Tech Stack?

Widevine, FairPlay, PlayReady; device‑bound/time‑bound licenses with offline policy and concurrency caps.

What do Kafka and Flink contribute to the Hotstar Tech Stack?

Kafka moves events; Flink turns streams into near‑real‑time features, counters, and alerts.

